Day 1: Assignments / Tutorial for CVR Engg.

A few basics

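- How to check whether a process is running

- ps -eaf | grep 'java' lists all processes whose command line contains "java" (the grep command itself also shows up in the list)

- How to kill a process forcefully

- ps -eaf | grep 'java'

- This shows the process IDs (the second column) of the processes that use java

- kill -9 PID (replace PID with the process ID found in the previous step)

- This sends SIGKILL, which terminates the process with that ID immediately, without giving it a chance to clean up

- ps -eaf | grep 'java'

- Run the same command again to confirm that the process is gone

- What does sudo do?

- It runs the given command with root privileges (more generally, as another user, root by default)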
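The process check and kill steps above come down to filtering ps -eaf lines for a pattern and reading the PID from the second column. A minimal Java sketch of that filtering, assuming the usual ps -ef column layout (UID PID PPID C STIME TTY TIME CMD); the class name PsFilter and the sample lines are illustrative only:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative sketch of `ps -eaf | grep 'java'` followed by reading the PID column.
// Assumes the usual `ps -ef` layout: UID PID PPID C STIME TTY TIME CMD.
class PsFilter {

    // Returns the PIDs of lines that contain `pattern`, skipping the grep
    // process itself (its own command line also contains the pattern).
    static List<Integer> pidsMatching(List<String> psLines, String pattern) {
        List<Integer> pids = new ArrayList<>();
        for (String line : psLines) {
            // Split into at most 8 columns so the CMD column keeps its spaces.
            String[] cols = line.trim().split("\\s+", 8);
            if (cols.length < 8 || !cols[1].matches("\\d+")) {
                continue; // header line or unexpected format
            }
            if (line.contains(pattern) && !cols[7].startsWith("grep")) {
                pids.add(Integer.parseInt(cols[1]));
            }
        }
        return pids;
    }

    public static void main(String[] args) {
        // Hypothetical ps -eaf output; real PIDs and paths will differ.
        List<String> sample = Arrays.asList(
                "UID        PID  PPID  C STIME TTY          TIME CMD",
                "hduser   14082     1  1 10:15 ?        00:00:12 /usr/lib/jvm/java-7-openjdk-amd64/bin/java -Dproc_namenode",
                "hduser   15001 14990  0 10:20 pts/0    00:00:00 grep --color=auto java");
        System.out.println(pidsMatching(sample, "java")); // [14082]
    }
}
```

On the command line, a common way to keep the grep line out of the result is grep '[j]ava': the pattern still matches "java", but grep's own command line contains "[j]ava" and therefore does not match.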

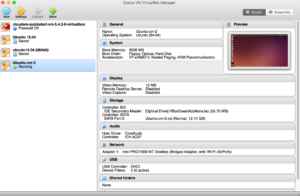

Start VM

- Start Virtual Box, choose your VM and Click on the Start Button

- Login as hduser

- Click on the right top corner and Chose Hadoop User.

- Enter password – abcd1234

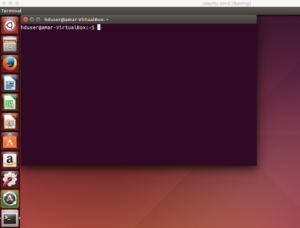

- Click on the Top Left Ubuntu Button and search for the terminal and click on it.

- You should see something similar as below

Start with HDFS

- Set JAVA_HOME and update PATH

- export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

- export PATH=$JAVA_HOME/bin:$PATH

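- Start Hadoop if it is not already running. In Hadoop 2.x, start-all.sh is deprecated in favour of running start-dfs.sh followed by start-yarn.sh, but it still starts both: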

/usr/local/hadoop/sbin/start-all.sh

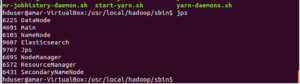
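The two export lines at the start of this section put $JAVA_HOME/bin in front of every other PATH entry, so java, javac and jar resolve to the OpenJDK 7 installation first. export only affects the current terminal session and the programs started from it, which is why the word count section sets the variables again. A small Java sketch of the check; PathCheck is a hypothetical helper, and PATH is assumed to be ':'-separated as on Linux:

```java
// Checks that $JAVA_HOME/bin comes first on PATH, which is what
// `export PATH=$JAVA_HOME/bin:$PATH` arranges.
class PathCheck {

    // PATH entries are ':'-separated on Linux; only the first entry matters here.
    static boolean jdkBinIsFirst(String javaHome, String path) {
        if (javaHome == null || path == null || path.isEmpty()) {
            return false;
        }
        String first = path.split(":", 2)[0];
        return first.equals(javaHome + "/bin");
    }

    public static void main(String[] args) {
        // Reads the environment inherited from the shell that launched this program.
        String javaHome = System.getenv("JAVA_HOME");
        System.out.println("JAVA_HOME = " + javaHome);
        System.out.println("JDK bin first on PATH: " + jdkBinIsFirst(javaHome, System.getenv("PATH")));
    }
}
```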

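- Check that Hadoop is running correctly

jps

jps lists the Java processes running for the current user, which include the Hadoop daemons.

The output should look similar to the following (process IDs will differ, and entries such as Main belong to other Java programs):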

hduser@amar-VirtualBox:/usr/local/hadoop/sbin$ jps

14416 SecondaryNameNode

14082 NameNode

14835 Jps

3796 Main

14685 NodeManager

14207 DataNode

14559 ResourceManager
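Checking the jps output by eye works, but it is also easy to script: each line is a PID followed by a main class name, and the five daemons listed above should all be present. A Java sketch; JpsCheck and its daemon list are assumptions based on the sample output above, not part of Hadoop:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Reports which expected Hadoop daemons are missing from `jps` output.
class JpsCheck {

    // The five daemons in the sample output above (HDFS + YARN on one node).
    static final List<String> EXPECTED = Arrays.asList(
            "NameNode", "SecondaryNameNode", "DataNode", "ResourceManager", "NodeManager");

    static List<String> missingDaemons(List<String> jpsLines) {
        Set<String> running = new HashSet<>();
        for (String line : jpsLines) {
            String[] parts = line.trim().split("\\s+");
            // Each jps line is "<pid> <main class name>".
            if (parts.length == 2 && parts[0].matches("\\d+")) {
                running.add(parts[1]);
            }
        }
        List<String> missing = new ArrayList<>();
        for (String daemon : EXPECTED) {
            if (!running.contains(daemon)) {
                missing.add(daemon);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Sample taken from the expected output above, with DataNode left out.
        List<String> sample = Arrays.asList(
                "14416 SecondaryNameNode", "14082 NameNode", "14835 Jps",
                "14685 NodeManager", "14559 ResourceManager");
        System.out.println("missing: " + missingDaemons(sample)); // missing: [DataNode]
    }
}
```

A missing DataNode, for example, usually means HDFS is not fully up, and the later copy commands will fail.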

- Make directories for the demonstration

The following command creates /user/hduser/dir/dir1 and /user/hduser/employees/salary; the -p option also creates any missing parent directories.

hadoop fs -mkdir -p /user/hduser/dir/dir1 /user/hduser/employees/salary

- Copy files into the directory. copyFromLocal can copy whole directories as well.

hadoop fs -copyFromLocal /home/hduser/example/WordCount1/file* /user/hduser/dir/dir1

- The ls command lists the files and directories under a path:

hadoop fs -ls /user/hduser/dir/dir1/

- The lsr command recursively lists the directories, subdirectories and files under the specified directory. In Hadoop 2.x, -lsr is deprecated; hadoop fs -ls -R does the same. The usage example is shown below:

hadoop fs -lsr /user/hduser/dir

- The cat command prints the contents of files to the terminal (stdout). The usage example is shown below:

hadoop fs -cat /user/hduser/dir/dir1/file*

- The hadoop chmod command is used to change the permissions of files. The -R option can be used to recursively change the permissions of a directory structure.

Note the permissions before the change:

hadoop fs -ls /user/hduser/dir/dir1/

Change the permissions (777 grants read, write and execute to the owner, the group and all other users):

hadoop fs -chmod 777 /user/hduser/dir/dir1/file1

List the directory again to see the new permissions:

hadoop fs -ls /user/hduser/dir/dir1/
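chmod 777 uses octal notation: each digit is the sum of read (4), write (2) and execute (1) for the owner, the group and others respectively, and hadoop fs -ls prints the result as rwx triplets after the file-type character. A minimal Java sketch of that conversion (OctalMode is an illustrative name):

```java
// Converts chmod-style octal modes to the rwx notation shown by `hadoop fs -ls`.
class OctalMode {

    // "777" -> "rwxrwxrwx", "644" -> "rw-r--r--": one digit each for
    // owner, group and others; 4 = read, 2 = write, 1 = execute.
    static String toSymbolic(String octal) {
        if (!octal.matches("[0-7]{3}")) {
            throw new IllegalArgumentException("expected three octal digits: " + octal);
        }
        StringBuilder sb = new StringBuilder();
        for (char c : octal.toCharArray()) {
            int bits = c - '0';
            sb.append((bits & 4) != 0 ? 'r' : '-');
            sb.append((bits & 2) != 0 ? 'w' : '-');
            sb.append((bits & 1) != 0 ? 'x' : '-');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(toSymbolic("777")); // rwxrwxrwx
        System.out.println(toSymbolic("644")); // rw-r--r--
    }
}
```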

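- The chown command changes the owner (and optionally the group) of files. The -R option changes ownership recursively for a whole directory structure. Only the HDFS superuser, i.e. the user that started the NameNode (here hduser), can change ownership.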

hadoop fs -chown amars:amars /user/hduser/dir/dir1/file1

Check the ownership now:

hadoop fs -ls /user/hduser/dir/dir1/file1

- The copyFromLocal command copies files from the local file system to HDFS. The usage example is shown below:

hadoop fs -copyFromLocal /home/hduser/example/WordCount1/file* /user/hduser/employees/salary

- The copyToLocal command copies files from HDFS to the local file system. The usage example is shown below:

hadoop fs -copyToLocal /user/hduser/dir/dir1/file1 /home/hduser/Downloads/

- The cp command copies files within HDFS from a source to a target:

hadoop fs -cp /user/hduser/dir/dir1/file1 /user/hduser/dir/

- The moveFromLocal command moves a file from the local file system into an HDFS directory and deletes the local source file. The usage example is shown below:

hadoop fs -moveFromLocal /home/hduser/Downloads/file1 /user/hduser/employees/

- The mv command moves files from a source to a destination within HDFS. It can also move several source files at once, in which case the target must be a directory. The usage example is shown below:

hadoop fs -mv /user/hduser/dir/dir1/file2 /user/hduser/dir/
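The difference between the copy commands (copyFromLocal, copyToLocal, cp) and the move commands (moveFromLocal, mv) is whether the source survives. HDFS cannot be exercised without a running cluster, so this sketch uses local files through java.nio as an analogy; it is not HDFS code, and CopyVsMove is an illustrative name:

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Local-filesystem analogy (java.nio, not HDFS) of the copy and move commands above.
class CopyVsMove {

    // Like copyFromLocal / cp: the target gets a copy and the source stays.
    static Path copyInto(Path src, Path targetDir) throws IOException {
        return Files.copy(src, targetDir.resolve(src.getFileName()));
    }

    // Like moveFromLocal / mv: the file ends up in the target and the source is gone.
    static Path moveInto(Path src, Path targetDir) throws IOException {
        return Files.move(src, targetDir.resolve(src.getFileName()));
    }

    public static void main(String[] args) throws IOException {
        Path local = Files.createTempDirectory("local");
        Path copies = Files.createTempDirectory("copies");
        Path moved = Files.createTempDirectory("moved");
        Path file1 = Files.write(local.resolve("file1"), "hello hadoop\n".getBytes(StandardCharsets.UTF_8));

        copyInto(file1, copies);
        System.out.println("after copy, source exists: " + Files.exists(file1)); // true
        moveInto(file1, moved);
        System.out.println("after move, source exists: " + Files.exists(file1)); // false
    }
}
```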

- The du command shows the size of each file and directory under the given path; for a directory, the size is the aggregate length of the files it contains. Add -s to print a single total for the path instead. The usage example is shown below:

hadoop fs -du /user/hduser
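For a single path, the size du reports is the sum of the lengths of all files beneath it. The sketch below computes the same aggregate for a local directory with java.nio's Files.walk (Java 8 or later, so not the JDK 7 set up above); it is a local analogy, not a call into HDFS, and DiskUsage is an illustrative name:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.stream.Stream;

// Local analogy of the size du reports for one path: the total length of all
// regular files under it (or the file's own length if it is a file).
class DiskUsage {

    static long aggregateLength(Path root) throws IOException {
        try (Stream<Path> paths = Files.walk(root)) {
            return paths.filter(Files::isRegularFile)
                        .mapToLong(p -> p.toFile().length())
                        .sum();
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.out.println("usage: java DiskUsage <local path>");
            return;
        }
        Path target = Paths.get(args[0]);
        System.out.println(aggregateLength(target) + "\t" + target);
    }
}
```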

- The rm command removes the specified files (use -rmdir for empty directories). An example is shown below:

hadoop fs -rm /user/hduser/dir/dir1/file1

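- The rmr command recursively deletes a directory together with all its files and subdirectories. In Hadoop 2.x, -rmr is deprecated in favour of hadoop fs -rm -r. The usage example is shown below: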

hadoop fs -rmr /user/hduser/dir

Web UI

NameNode daemon

- http://localhost:50070/

Log Files

- http://localhost:50070/logs/

Explore Files

- http://localhost:50070/explorer.html#/

SecondaryNameNode Status

- http://localhost:50090/status.html

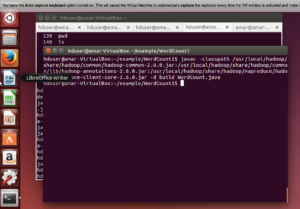

Hadoop Word Count Example

Go to the home directory and take a look at its contents

- cd /home/hduser

- The pwd command should print /home/hduser.

- Run ls -lart to list all files and directories, including hidden ones, in long format with the most recently modified entries last.

- Confirm that the Hadoop services are running

- Run jps; you should see the same daemons as in the jps output shown earlier.

- Go to the example directory: cd /home/hduser/example/WordCount1/

- Run ls. If there is a directory named build, delete it and recreate it. This ensures that your program does not use previously compiled classes or other leftover files:

- rm -rf build

- mkdir build

- Remove the JAR file if it already exists (-f suppresses the error when there is nothing to remove)

- rm -f /home/hduser/example/WordCount1/wcount.jar

- Set JAVA_HOME and update PATH

- export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

- export PATH=$JAVA_HOME/bin:$PATH

- Build the example (when you copy and paste the command, make sure no spaces or line breaks get inserted inside the long -classpath value):

- /usr/lib/jvm/java-7-openjdk-amd64/bin/javac -classpath /usr/local/hadoop/share/hadoop/common/hadoop-common-2.6.0.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.0.jar -d build WordCount.java

- Create the JAR

- jar -cvf wcount.jar -C build/ .

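- Now prepare the input for the program. You can give the output directory your own name, but it must not exist yet: the job fails if its output directory is already present.

- Make your own input directory (hadoop dfs is deprecated in Hadoop 2.x and prints a warning, but it still works; hadoop fs, used earlier, is equivalent)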

- hadoop dfs -mkdir /user/hduser/input

- Copy the input files (file1, file2, file3) to HDFS

- hadoop dfs -put file* /user/hduser/input

- Check if the output directory already exists.

- hadoop dfs -ls /user/hduser/output

- If it already exists, delete it with the following commands:

- hadoop dfs -rm /user/hduser/output/*

- hadoop dfs -rmdir /user/hduser/output


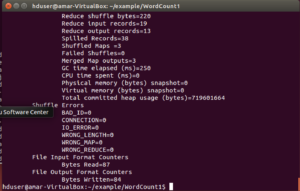
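The run command names the class org.myorg.WordCount, so wcount.jar must contain org/myorg/WordCount.class; jar -tf wcount.jar lists the entries. The same check in Java via java.util.jar.JarFile, a sketch in which JarClasses is an illustrative name:

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

// Lists the fully qualified class names inside a jar, similar to `jar -tf`
// but keeping only .class entries (nested classes keep their $ suffix).
class JarClasses {

    static List<String> classNames(String jarPath) throws IOException {
        List<String> names = new ArrayList<>();
        try (JarFile jar = new JarFile(jarPath)) {
            Enumeration<JarEntry> entries = jar.entries();
            while (entries.hasMoreElements()) {
                String entry = entries.nextElement().getName();
                if (entry.endsWith(".class")) {
                    // "org/myorg/WordCount.class" -> "org.myorg.WordCount"
                    String name = entry.substring(0, entry.length() - ".class".length());
                    names.add(name.replace('/', '.'));
                }
            }
        }
        return names;
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.out.println("usage: java JarClasses wcount.jar");
            return;
        }
        for (String name : classNames(args[0])) {
            System.out.println(name);
        }
    }
}
```

If org.myorg.WordCount is not in the list, the jar was probably built from the wrong directory; -C build/ . makes the paths inside the jar start at the package root.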

- Run the program

- hadoop jar wcount.jar org.myorg.WordCount /user/hduser/input/ /user/hduser/output

- At the end you should see output similar to the following:

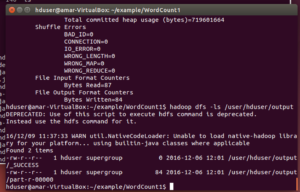

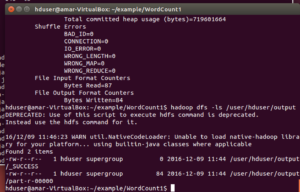
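The job above follows the MapReduce flow: the mapper emits (word, 1) for every token, the framework groups and sorts the pairs by word, and the reducer sums each group. The in-memory Java sketch below imitates that flow without Hadoop (Java 8 or later). It assumes WordCount.java tokenizes lines with StringTokenizer, as the classic org.myorg.WordCount tutorial does; the class name and the two input lines are made up for illustration:

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// In-memory imitation of the WordCount data flow; it does not use Hadoop.
class WordCountSimulation {

    // Map phase: emit (word, 1) for every whitespace-separated token.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer tokens = new StringTokenizer(line);
        while (tokens.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<String, Integer>(tokens.nextToken(), 1));
        }
        return pairs;
    }

    // Shuffle: group the 1s by word. The TreeMap keeps words sorted, as the
    // framework sorts keys before reduce (same order for plain ASCII words).
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            groups.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return groups;
    }

    // Reduce: sum each group and format it as "word<TAB>count", the layout of part-r-00000.
    static List<String> reduce(Map<String, List<Integer>> groups) {
        List<String> lines = new ArrayList<>();
        for (Map.Entry<String, List<Integer>> group : groups.entrySet()) {
            int sum = 0;
            for (int one : group.getValue()) {
                sum += one;
            }
            lines.add(group.getKey() + "\t" + sum);
        }
        return lines;
    }

    static List<String> run(List<String> inputLines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : inputLines) {
            pairs.addAll(map(line));
        }
        return reduce(shuffle(pairs));
    }

    public static void main(String[] args) {
        // Made-up input lines, not the contents of file1..file3.
        List<String> input = Arrays.asList("Hello World Bye World", "Hello Hadoop Goodbye Hadoop");
        run(input).forEach(System.out::println);
    }
}
```

In the real job, the map calls run in parallel on separate input splits and the shuffle moves data between machines; the sketch keeps only the logic of each phase.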

- Check if the output files have been generated

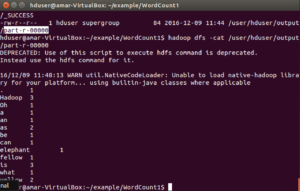

- hadoop dfs -ls /user/hduser/output should list a _SUCCESS marker file and the result file part-r-00000, similar to the screenshot

- Print the contents of the output file; each line holds a word and its count, separated by a tab

- hadoop dfs -cat /user/hduser/output/part-r-00000

- Verify the word counts against the input files:

- cat file1 file2 file3

- The word counts should match.
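Comparing every count by hand gets tedious for larger files. A quick consistency check is that the counts in part-r-00000 must add up to the total number of words in file1, file2 and file3, which for plain whitespace-separated text is roughly what wc -w reports. A Java sketch of that check; CountCheck is hypothetical, and it assumes part-r-00000 has first been copied to the local disk (for example with hadoop fs -copyToLocal) and that each line is word TAB count:

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;
import java.util.StringTokenizer;

// Cross-checks a WordCount result: the counts must add up to the number of input words.
class CountCheck {

    // Sum of the count column of "word<TAB>count" lines.
    static long sumOfCounts(List<String> partLines) {
        long total = 0;
        for (String line : partLines) {
            int tab = line.lastIndexOf('\t');
            if (tab >= 0) {
                total += Long.parseLong(line.substring(tab + 1).trim());
            }
        }
        return total;
    }

    // Number of whitespace-separated words, using the same tokenization as StringTokenizer.
    static long wordCount(List<String> inputLines) {
        long total = 0;
        for (String line : inputLines) {
            total += new StringTokenizer(line).countTokens();
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 2) {
            System.out.println("usage: java CountCheck part-r-00000 file1 [file2 ...]");
            return;
        }
        long counted = sumOfCounts(Files.readAllLines(Paths.get(args[0]), StandardCharsets.UTF_8));
        long words = 0;
        for (int i = 1; i < args.length; i++) {
            words += wordCount(Files.readAllLines(Paths.get(args[i]), StandardCharsets.UTF_8));
        }
        System.out.println("sum of counts: " + counted + ", input words: " + words
                + (counted == words ? " (match)" : " (MISMATCH)"));
    }
}
```

Usage, from /home/hduser/example/WordCount1 after copying the result file there: java CountCheck part-r-00000 file1 file2 file3. Matching totals do not prove every individual count is right, but a mismatch shows immediately that something went wrong.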